Commentary

The Virtual Lab in Nature

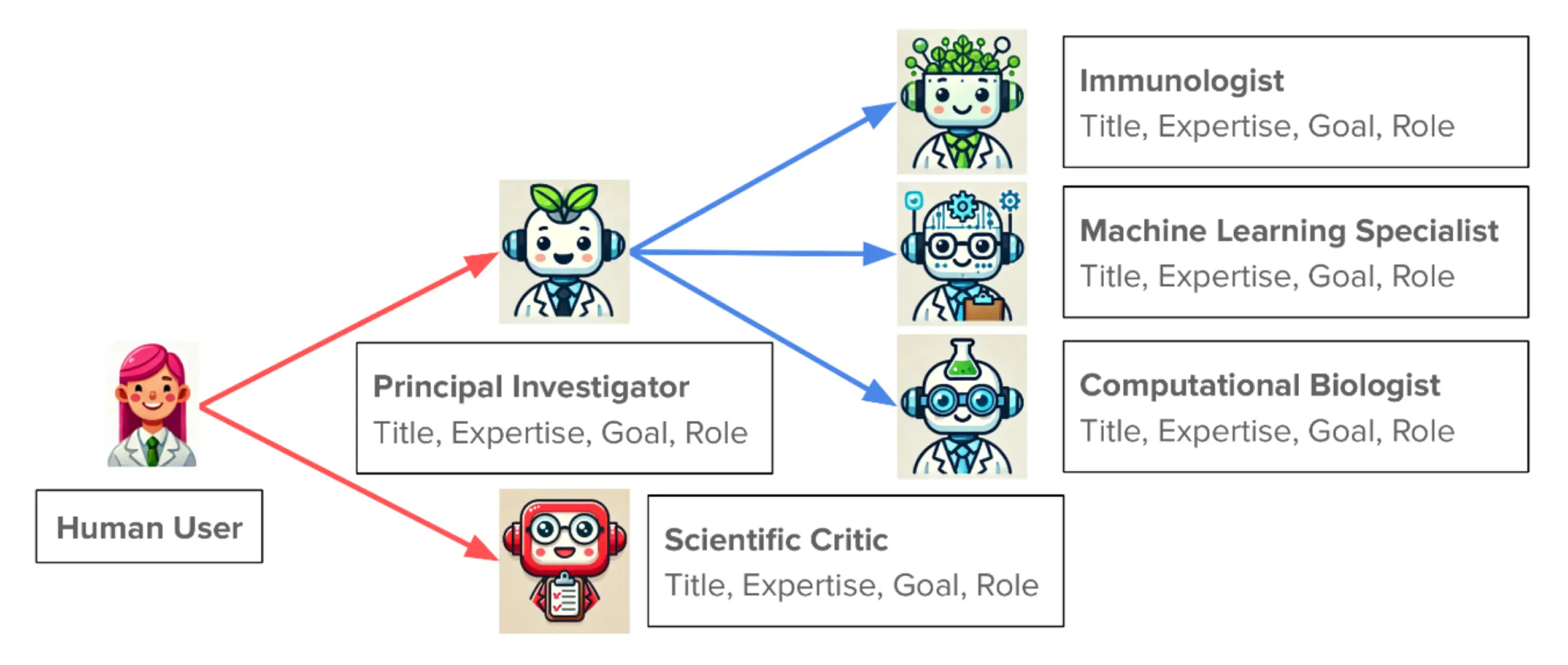

In July, researchers at Stanford University and the Chan Zuckerberg Biohub in San Francisco published a paper in Nature describing what they called a “Virtual Lab,” an experiment in orchestrating a team of artificial intelligence agents to perform scientific roles. The system was built hierarchically: a “Principal Investigator” agent defined research goals, a “Scientific Critic” agent evaluated proposals, and specialized “Researcher” agents (for example, an immunologist) were tasked with designing and refining candidate nanobodies, which are single-domain antibodies. These agents interacted in two modes: group meetings, in which multiple AI “scientists” discussed a problem collectively, and one-on-one meetings, in which a researcher agent pursued a specific task with feedback from the critic. Once prompted with a general agenda, the agents required little additional input from human supervisors, generating hypotheses and iterating largely on their own.

Applied to SARS-CoV-2, the coronavirus responsible for COVID-19, the Virtual Lab produced dozens of nanobody designs, of which a small subset was synthesized and tested by human researchers. Only a handful showed binding to circulating variants of the virus. The result was modest, but the framing was not: a laboratory of AI “scientists” functioning with minimal human guidance.

What made the work publishable was not the result but the orchestration: the image of a laboratory staffed by machines, a vision at once futuristic and managerial. That distinction matters. The paper demonstrates that AI can perform familiar tasks more quickly and at lower marginal cost than human researchers; it does not demonstrate that AI can perform tasks we could not otherwise have accomplished. The excitement, then, lies in the promise that science can be rendered faster, cheaper, and more “accessible.” That promise is precisely what should trouble us. Efficiency is not synonymous with discovery, and when institutions begin to conflate the two, science is no longer pursued for understanding but managed for throughput.

The Commodification of Science

The Virtual Lab is best understood in the context of the political economy in which it was produced. For decades, universities have drifted toward the logic of the corporation: knowledge is treated as a product, students as consumers, and laboratories as factories to be rationalized. In such a framework, an automated system that claims to reproduce the coordinated labor of principal investigators, graduate students, postdoctoral fellows, and staff scientists is attractive precisely because it promises lower costs and greater predictability in labor relations. Replacing human scientists with AI agents is, from the market’s perspective, the sensible choice: the AI is cheaper, will never unionize, and can work around the clock without pause. The ethic that once shaped laboratories, that training, mentorship, and the slow accumulation of skill are goods in themselves, is displaced by an ethic of efficiency, where the central measure of success is output per dollar invested.

Moreover, the adoption of AI systems obscures the very labor on which science depends. Authorship and accountability are unsettled when a “PI agent” sets goals, a “critic” agent evaluates proposals, and a “researcher” agent designs molecules, while human scientists are reduced to synthesizing and testing what the system has produced. The more credit accrues to the architecture of AI models, the less visible becomes the judgment, troubleshooting, and validation carried out by human scientists. This erasure is the logic of commodification. What is presented as an efficiency gain is, in fact, the absorption of human labor into the background of capitalist production.

Seen together, these dynamics reframe the very purpose of the academic laboratory. Once the site of mentorship and the cultivation of scientific intuition, it is reimagined as a managerial space where scientists supervise black boxes and efficiency metrics stand in for discovery. Tacit skills, once valued as the heart of scientific practice, are displaced by automated systems that promise speed. The spectacle of the Virtual Lab, then, lies in the way it reconfigures science into a process of invisible labor and visible management: knowledge as product, efficiency as ethic.

The Friedman Defense and the Fault Line

To this critique, a free-market economist might respond with what we could call “the Friedman Defense.” If the Virtual Lab reduces costs and accelerates discovery, then resources are freed for other projects. Universities that operate more like businesses allocate resources more rationally. Consumers, conceived here as patients, ultimately benefit when therapies arrive faster and at lower cost. In this account, efficiency is itself a moral good, because it maximizes the public welfare.

There is something persuasive in this logic. During a pandemic, speed can undoubtedly save lives. A technology that compresses the timeline from hypothesis to therapy is not trivial, and it would be irresponsible to dismiss this acceleration. The problem arises when efficiency is elevated from a useful instrument in times of crisis into the governing principle of science itself. When that shift occurs, efficiency reorganizes the labor of science entirely. Highly trained PhDs conducting original research are replaced with technicians executing standardized assays, while day-to-day operations fall increasingly under administrators with little or no scientific background. This is already visible in the modern university, where directors and other layers of management without disciplinary expertise set the priorities for fields they do not practice. The result is a loss of intellectual depth and, consequently, a loss of safety and reliability. Technicians without advanced training are less able to recognize when an assay has failed, to identify anomalies in data, or to challenge the assumptions embedded in a model’s output. Managers focused on metrics rather than understanding are more likely to push projects toward short-term profits at the expense of care. Efficiency achieved through deskilling thus produces its own inefficiencies: experiments that must be repeated, errors that go unnoticed until too late, and discoveries that prove brittle when tested outside the controlled environment of the model.

The deeper flaw lies in the mythology of the market itself. Markets are never truly free: they are structured by institutions, shaped by policy, and skewed toward the interests of those with capital to invest. To speak of them as neutral allocators of resources is to ignore the political economy that determines which drugs are pursued, which diseases are neglected, and which lives are valued. The Virtual Lab, like other technologies before it, will be deployed where it promises returns, not where it promises novel scientific understanding.

The result is misallocation. We already know this story: billions flow into lifestyle drugs and therapies with massive markets while basic virology and pandemic preparedness scrape by on limited funds. If scientific direction is ceded to consumer demand, we will discover more ways to combat acne and fewer ways to prevent the next pandemic. In this process, scientists themselves are alienated from the work of discovery. No longer the authors of knowledge, they become monitors of automated systems, estranged from both the product of their labor and the purpose of their craft.

This is the fault line in the Friedman Defense: markets reward what sells, not what sustains. To confuse efficiency with progress is to abandon precisely those areas of science that markets will never fund adequately: basic research, preparedness, and the slow work of understanding.

AI as an Instrument

To resist commodification is not to reject pragmatic instruments. The Virtual Lab, like many technologies before it, can accelerate routine tasks and broaden the repertoire of methods available to scientists. But tools must remain tools. They can support judgment, but they must not replace it.

AI has already proven its worth in areas like medical diagnostics, where it acts not as a substitute for expertise but as a force multiplier. A landmark study in Germany found that AI-supported mammography flagged one additional case of cancer per 1,000 women screened without increasing unnecessary recalls. Overall, AI-assisted screening improved breast cancer detection by as much as 18% while keeping false-positive rates steady, and it reduced clinicians’ workload by 40% without compromising accuracy. In another recent deployment, across Northwestern Medicine’s 11-hospital network, a generative AI tool raised radiology reporting productivity by up to 40%, with radiologists maintaining diagnostic quality.

These examples show that AI can deepen rigor where patterns are complex and volumes are high by alerting experts to subtle signals, reducing procedural drift, and freeing attention for more interpretive, critical judgment. These models exist to help humans see more clearly and reach farther than before, without replacing human responsibility.

Conclusion

The Virtual Lab is a striking demonstration, but its significance lies less in what it discovered than in what it reveals about the trajectory of science under the sway of market efficiency. If adopted as a justification for cutting labor and treating discovery as a commodity, it will hollow out training, obscure responsibility, and accelerate the misallocation of research resources. Efficiency is valuable only when subordinated to the deeper aims of science: understanding, responsibility, and the pursuit of knowledge as a public good. AI can serve those aims, but only if we insist that it remain an instrument and not a solution.